I have quipped a few times that my biggest problems with Swift so far have to do with struggles in the debugger. It seems slow, inaccurate, and harder to use than in Objective-C. Some of this is just a learning curve, but other aspects seem fundamentally broken. I whined on Twitter about a scenario in which lldb seemed utterly unaware of one of my variables:

What do they say about repeating the same steps expecting different results? pic.twitter.com/lw8LP8T7qg

— Daniel Jalkut (@danielpunkass) April 30, 2016

A kind Apple employee, Kate Stone, followed up with me and ultimately encouraged me to file a bug:

@danielpunkass Definitely interested in 7.3.1 seed results! Even without repro a radar makes it easier to ask clarifying questions.

— Kate Stone (@k8stone) April 30, 2016

I obliged, filing Radar #26032843. Today, Apple got back to me with a follow-up, suggesting rather gently that I may have neglected to disable optimization in my target. Rookie move! The kind of behavior I was seeing in the debugger is exactly what happens when lldb can’t make as much sense of your code because of inlined functions, loops that have been restructured, etc.
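As a minimal illustration of the symptom (made-up names, not code from the project in the radar), consider a function with an intermediate local variable. In an unoptimized build, a breakpoint on the `return` line should let lldb print `doubled`. In an optimized build, the compiler is free to inline the closure or skip storing the intermediate value at all, in which case lldb may report the variable as unavailable even though the program's result is unchanged.

```swift
// Hypothetical example: `total` and `doubled` are illustrative names only.
func total(_ values: [Int]) -> Int {
    // An intermediate value that an optimizing build may never materialize
    // in a form the debugger can inspect.
    let doubled = values.map { $0 * 2 }

    // Set a breakpoint here and try `po doubled` in lldb,
    // once with -Onone and once with -O, to compare.
    return doubled.reduce(0, +)
}

print(total([1, 2, 3]))  // prints 12
```

The output is the same either way; only what the debugger can see differs.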

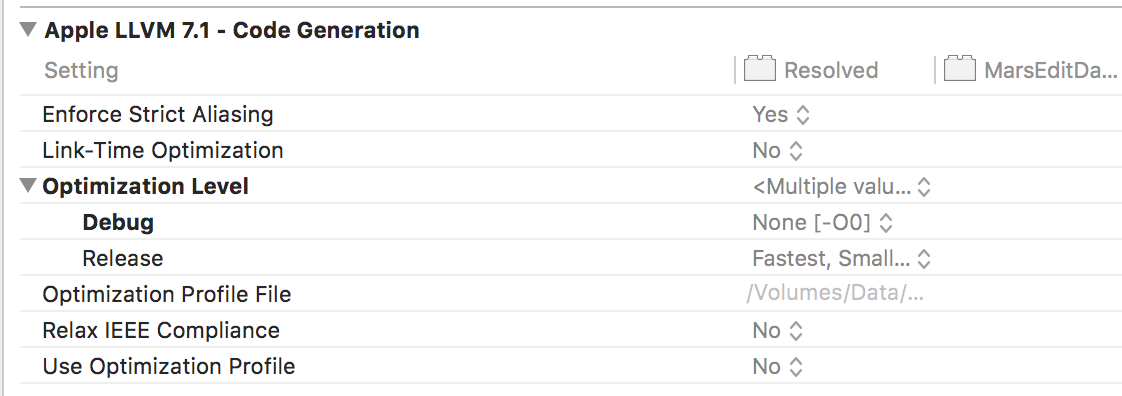

In fact, I had correlated the symptoms with such a problem, but when I went to check on the status of my optimization settings, everything looked fine. Why? Because I was looking, by habit, at the Clang LLVM “Code Generation” settings for optimization:

See? Optimization disabled. Just as it is for all my projects, and all my targets, because I define it once in my centralized Debug “.xcconfig” file, to be sure I never screw it up:

    // We only specify an optimization setting for Debug builds.
    // We rely upon Apple's default settings to produce reasonable
    // choices for Release builds
    GCC_OPTIMIZATION_LEVEL = 0

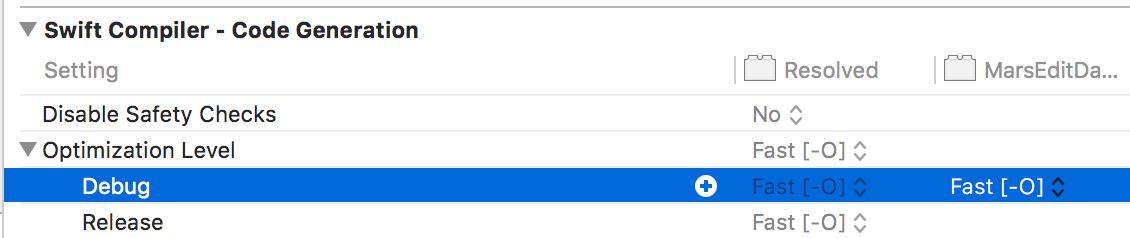

So why does debugging Swift fail so hard for me? Because Swift doesn’t use that optimization setting. Scrolling down a little farther, I find the culprit in Swift’s own compiler settings section:

So the lesson is that new Swift developers coming from a legacy of Objective-C, C++, or C development need to take stock of Swift compiler settings, because they are liable to be rooted in completely different build settings. On the one hand, I’m glad Apple is finally able to get away from a build setting like “GCC_OPTIMIZATION_LEVEL” (though keeping the name in the GCC -> LLVM transition prevented problems like this back then). On the other hand, it’s kind of annoying to have to use multiple build settings to express high-level directives that affect whether my code will be debuggable.

At least, because I am not an animal, this will also only ever need to be done once, with an edit to the pertinent “.xcconfig” file:

    // We only specify an optimization setting for Debug builds, we rely upon
    // Apple's default settings to produce reasonable choices for Release builds
    GCC_OPTIMIZATION_LEVEL = 0
    SWIFT_OPTIMIZATION_LEVEL = -Onone
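To guard against forgetting one of the two settings again, a small check along these lines could scan the text of a Debug “.xcconfig” file. This is only a sketch under loud assumptions: `missingSettings` is a hypothetical helper, not an Xcode or Apple API, and it only understands plain `NAME = value` lines and `//` comments. It ignores `#include` directives and conditional settings such as `NAME[sdk=...]`.

```swift
import Foundation

/// Hypothetical helper: returns the required setting names that are not
/// assigned anywhere in the given xcconfig text.
func missingSettings(in xcconfig: String, required: [String]) -> [String] {
    var defined = Set<String>()
    for rawLine in xcconfig.components(separatedBy: .newlines) {
        // Drop everything from the first "//" onward (comment text).
        let code = rawLine.components(separatedBy: "//")[0]
        // Split "NAME = value" at the first "=" only.
        let parts = code.split(separator: "=", maxSplits: 1)
        guard parts.count == 2 else { continue }
        defined.insert(parts[0].trimmingCharacters(in: .whitespaces))
    }
    return required.filter { !defined.contains($0) }
}

// Usage: a Debug config that only sets the Clang-side option.
let debugConfig = """
// Debug settings
GCC_OPTIMIZATION_LEVEL = 0
"""
let needed = ["GCC_OPTIMIZATION_LEVEL", "SWIFT_OPTIMIZATION_LEVEL"]
print(missingSettings(in: debugConfig, required: needed))
// prints ["SWIFT_OPTIMIZATION_LEVEL"]
```

A check like this only inspects the file's text. The values Xcode actually resolves for a target can still differ if the setting is overridden at the project or target level.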

Now if you’ll excuse me I’m going to take a tour of other Swift-specific compiler settings to make sure I’m not shooting myself in the foot in some other way!