I was inspired today, by a question from another developer, to dig into Xcode’s performance testing. This developer had observed that XCTestCase exposes a class method, defaultPerformanceMetrics, whose documentation strongly suggests it can be used to add performance metrics:

“This method returns XCTPerformanceMetric_WallClockTime by default. Subclasses of XCTestCase can override this method to change the behavior of measureBlock:.”

If you’re not already familiar, the basic approach to using Xcode’s performance testing infrastructure is to add unit tests to your project that wrap code with instructions to measure performance. From the default unit test template:

    func testPerformanceExample() {
        // This is an example of a performance test case.
        self.measure {
            // Put the code you want to measure the time of here.
        }
    }

Depending on the application under test, one can imagine all manner of interesting things that might be useful to tabulate during the course of a critical length of code. As mentioned in the documentation, “Wall Clock Time” is the default performance metric. But what else can be measured?

Nothing.

At least, according to any header files, documentation, WWDC presentations, or blunt Googling that I have encountered. There is exactly one publicly documented Xcode performance testing metric, and it’s XCTPerformanceMetric_WallClockTime.

I was curious whether supporting additional, custom performance metrics might be possible but under-documented. To test this theory, I added “beansCounted” to the list of performance metrics returned from my XCTestCase subclass. For some reason I couldn’t get Swift to accept the XCTPerformanceMetric pseudo-type, but it allowed me to override as returning an array of String:

    override static func defaultPerformanceMetrics() -> [String] {
        return ["beansCounted"]
    }

When I build and test, this fails with a runtime exception: “Unknown metric: beansCounted”. The location of an exception like this is a great clue about where to go hunting for information about whether an unknown metric can be made into a known one! If there’s a trick to implementing support for my custom “beansCounted” metric, the answer lies in XCTestCase’s “measureMetrics(_: automaticallyStartMeasuring: forBlock:)” method, which is where the exception was thrown.

By setting a breakpoint on this method and stepping through the assembly in Xcode, I can watch as the logic unfolds. To simplify what happens: first, a list of allowable metrics is computed, and then the list of desired metrics is iterated. If any metric is not in the list? Bzzt! Throw an exception.
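To make that flow concrete, here is a rough model of the check in plain Swift. This is only a sketch of the behavior I observed, not Apple's code: the names `UnknownMetricError`, `allowedMetrics`, and `validate(requested:allowed:)` are all made up for illustration, and the allowed set is deliberately abbreviated.

```swift
// Hypothetical model of the validation step; these names are illustrative
// and are not XCTest's real internals.
struct UnknownMetricError: Error, Equatable {
    let metric: String
}

// Stand-in for the list of allowable metrics (abbreviated on purpose).
let allowedMetrics: Set<String> = [
    "com.apple.XCTPerformanceMetric_WallClockTime",
    "com.apple.XCTPerformanceMetric_UserTime",
]

// Walk the requested metrics and reject the first one that is not allowed.
func validate(requested: [String], allowed: Set<String> = allowedMetrics) throws {
    for metric in requested where !allowed.contains(metric) {
        throw UnknownMetricError(metric: metric)
    }
}

do {
    try validate(requested: ["com.apple.XCTPerformanceMetric_WallClockTime", "beansCounted"])
} catch let error as UnknownMetricError {
    print("Unknown metric: \(error.metric)")  // prints "Unknown metric: beansCounted"
} catch {
    print("Unexpected error: \(error)")
}
```

The point of the model is that a requested metric passes only if it is already on the list, so nothing a test case declares can add a new one.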

I determined that things are relatively hardcoded such that it’s not trivial to add support for a new metric. I was hoping I could implement some magic methods in my test case, like “startMeasuring_beansCounted” and “stopMeasuring_beansCounted”, but that doesn’t appear to be the case. The performance metrics are supported internally by a private Apple class called XCTPerformanceMetric, and the list of allowable metrics is derived from a few metrics hardcoded in the “measureMetrics…” method:

- “com.apple.XCTPerformanceMetric_WallClockTime”

- “com.apple.XCTPerformanceMetric_UserTime”

- “com.apple.XCTPerformanceMetric_RunTime”

- “com.apple.XCTPerformanceMetric_SystemTime”

As well as a bunch of others exposed by a private “knownMemoryMetrics” method:

- “com.apple.XCTPerformanceMetric_TransientVMAllocationsKilobytes”

- “com.apple.XCTPerformanceMetric_TemporaryHeapAllocationsKilobytes”

- “com.apple.XCTPerformanceMetric_HighWaterMarkForVMAllocations”

- “com.apple.XCTPerformanceMetric_TotalHeapAllocationsKilobytes”

- “com.apple.XCTPerformanceMetric_PersistentVMAllocations”

- “com.apple.XCTPerformanceMetric_PersistentHeapAllocations”

- “com.apple.XCTPerformanceMetric_TransientHeapAllocationsKilobytes”

- “com.apple.XCTPerformanceMetric_PersistentHeapAllocationsNodes”

- “com.apple.XCTPerformanceMetric_HighWaterMarkForHeapAllocations”

- “com.apple.XCTPerformanceMetric_TransientHeapAllocationsNodes”

How interesting! There are a lot more metrics defined than the single “wall clock time” exposed by Apple. So, should we use them? Official answer: no way! This is private, unsupported stuff, and can’t be relied upon. Punkass Daniel Jalkut answer? Why not? They’re your tests, and you’re the only one who will get hurt if they suddenly stop working. In my opinion, taking advantage of private, undocumented system behavior for private, internal gain is much different than shipping public software that relies upon such undocumented behaviors.
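If you do go down this road, a typo in one of those long identifiers would surface only as an “Unknown metric” exception at test time. One way to reduce that risk is to collect the strings as constants. The sketch below is just a convenience of my own: the `PrivateMetric` enum and its member names are hypothetical, not anything XCTest defines, and only a few identifiers from the lists above are included.

```swift
// Hypothetical helper: a caseless enum used purely as a namespace for a few
// of the private identifiers listed above. XCTest does not define these names.
enum PrivateMetric {
    static let prefix = "com.apple.XCTPerformanceMetric_"
    static let userTime = prefix + "UserTime"
    static let systemTime = prefix + "SystemTime"
    static let persistentVMAllocations = prefix + "PersistentVMAllocations"
    static let transientHeapAllocationsKilobytes = prefix + "TransientHeapAllocationsKilobytes"
}

// Build a metrics array from the constants instead of hand-typed strings.
let metrics = [PrivateMetric.userTime, PrivateMetric.persistentVMAllocations]
print(metrics)
```

An override of defaultPerformanceMetrics could return an array built this way rather than raw string literals.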

I modified my unit test subclass to return a custom array of metrics based on the discoveries above, just to test a few:

    override static func defaultPerformanceMetrics() -> [String] {
        return [
            XCTPerformanceMetric_WallClockTime,
            "com.apple.XCTPerformanceMetric_TransientHeapAllocationsKilobytes",
            "com.apple.XCTPerformanceMetric_PersistentVMAllocations",
            "com.apple.XCTPerformanceMetric_UserTime"
        ]
    }

The tests build and run with no exception. That’s a good sign! But these “secret performance tests” are only useful if they can be observed and tracked the way the wall clock time can be. How does Xcode hold up? I made my demonstration test purposefully impactful on some metrics:

    func testPerformanceExample() {
        self.measure {
            for _ in 1..<100 {
                print("wasting time")
            }
            let _ = malloc(3000)
        }
    }

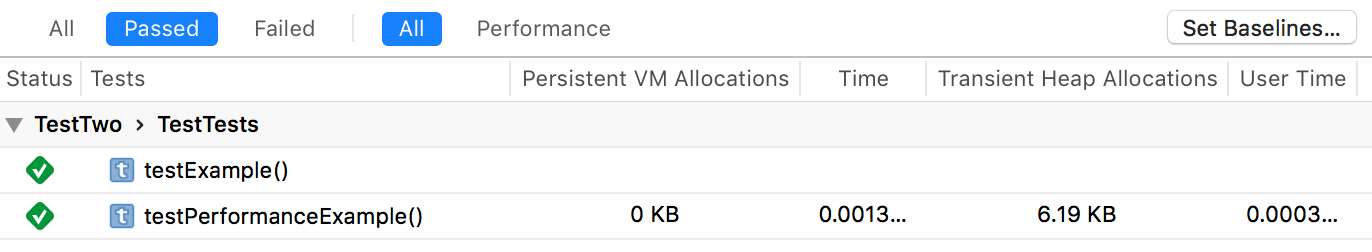

Now when I build and test, look what shows up in the Test navigator’s editor pane:

Look at all those extra columns! And if I click the “Set Baselines…” button, then tweak my function to make it substantially less performant:

    func testPerformanceExample() {
        self.measure {
            for _ in 1..<10000 {
                print("wasting time")
            }
            let _ = malloc(300000)
        }
    }

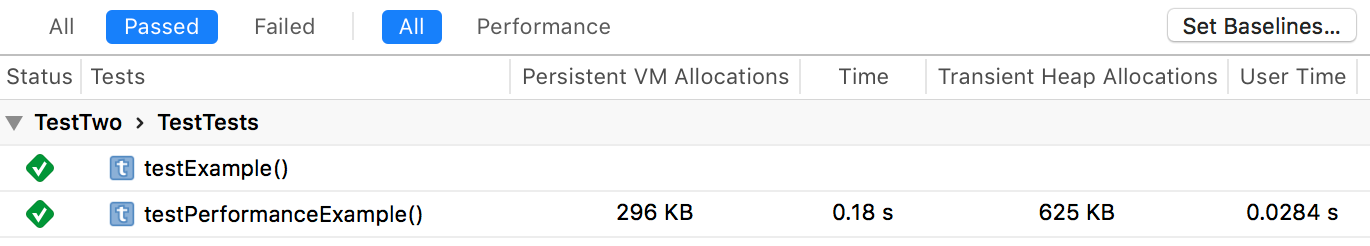

Now the columns have noticably larger numbers:

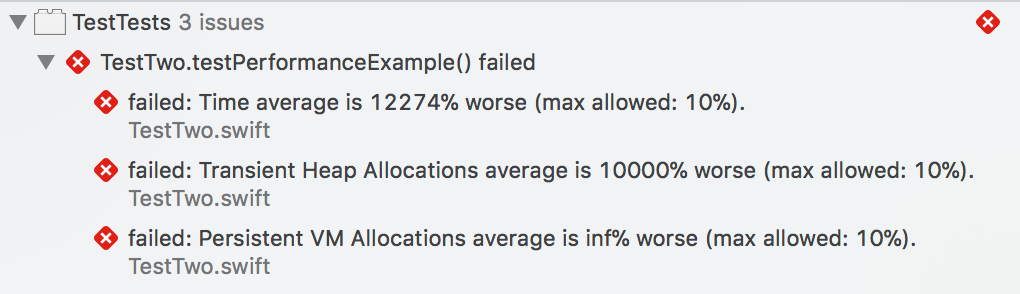

But more importantly, the test fails:

I already mentioned that by any official standard, you should not take advantage of these secret metrics. They are clearly not supported by Apple, may be inaccurate or have bugs, and could outright stop working at any time. I also said that, in my humble opinion, you should feel free to use them if you can take advantage of them. The fact that they are supported so well in Xcode probably implies that groups internal to Apple are using them and benefiting from them. Your mileage may vary.

The only rule is this: if Apple does do anything to change their behavior, or you otherwise ruin your day by deciding to play with them, you shouldn’t blame Apple, and you can’t blame me!

Enjoy.