Over the past few months I have become quite taken by Auto Layout, Apple’s powerful layout specification framework for Mac and iOS.

For the past few years I’ve heard both that Auto Layout is brilliant and that it has a frustrating learning curve. I can now attest that both of these are true.

One of the problems people have complained most about with respect to Auto Layout is the extent to which Xcode’s Interface Builder falls short in providing assistance specifying constraints. As many people have noticed, Apple is addressing these complaints slowly but surely. Xcode 5’s UI for adding constraints and debugging layout issues is dramatically superior to the functionality in Xcode 4.

Still, there is much room for improvement.

One frustrating behavior arises when you decide to move a large number of views from one position in a view hierarchy to another: for example, the simple and common task of collecting a number of views and embedding them in a new superview. This task is so common that Apple provides a variety of helpful tools under Editor -> Embed In to streamline it.

Here’s the big downer with respect to constraints: whenever you move a view from one superview to another, all of the constraints attached to the old superview, constraints that you may have laboriously fine-tuned over hours or days, simply disappear. Poof!

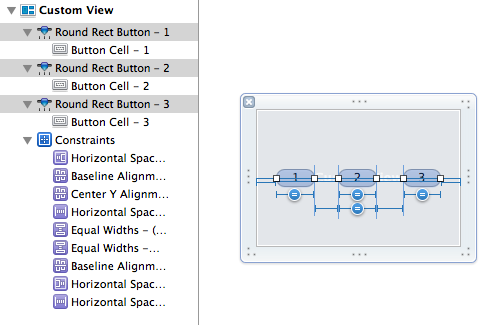

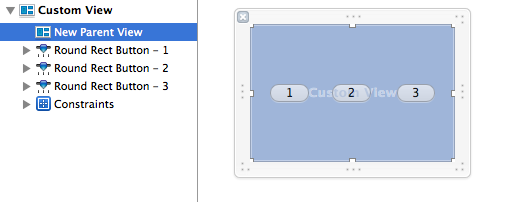

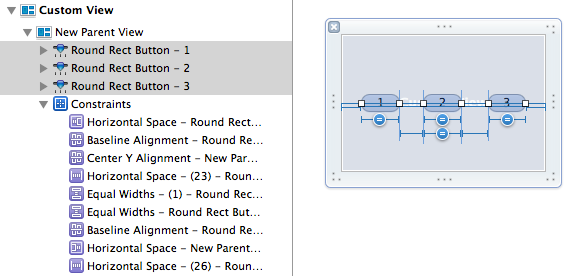

This isn’t such a big deal when your constraints happen to match what Interface Builder suggests for you. But even very simple interfaces may have a fairly large number of constraints. Consider this contrived example, in which three buttons are arranged to roughly share the width of a container view:

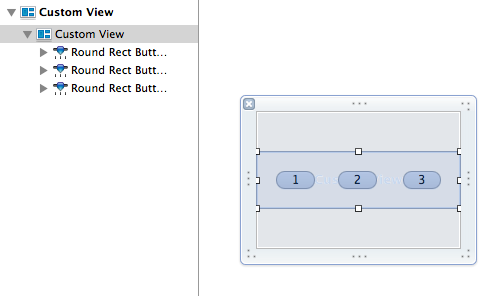

Nine constraints, and the removal or misconfiguration of any one will lead to incorrect layout in my app. Yet simply embedding the views in a custom view wipes them all out:

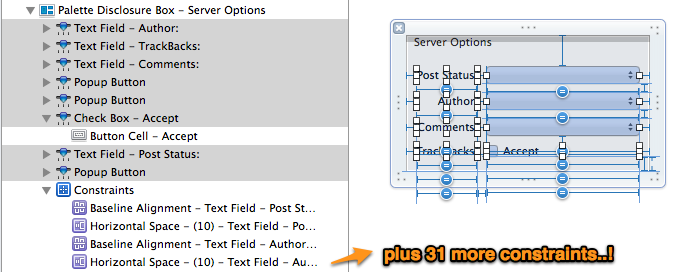

This problem is bad enough in the contrived scenario above, but in my much more complicated interfaces, a collection of views might comprise 50 or more customized constraints. Here’s a “simple” subsection of MarsEdit’s post editor side panel:

Having to piece those all together again just because I want to rearrange some views, well, it makes me mad. And when I get mad? I get … innovative!

A Pattern For Transplanting Constraints

Thanks to recent changes in Interface Builder’s file format for xib files, it’s more straightforward than ever to hand-tune the contents of a xib file outside of Xcode. It should go without saying that in doing so, you take your fate into your hands, etc., etc. But if you’re anything like me, a little hand-editing in BBEdit is worth the risk if it saves hours of much more intricate hand-editing back in Interface Builder. You’ll save valuable time and also reduce the very real risk of missing some nuanced detail as you try to reimplement everything by hand.

So without further ado, here are steps you can follow to transplant a set of views in a xib file such that the constraints from the old view follow over to the new view:

- Make a backup of your .xib file. You’re going to screw this up at least once, so you’ll want something “sane” to fall back on when you do.

- In Interface Builder, create the parent view if it doesn’t exist already. Give it a real obvious name like “New Parent View” so you’ll be able to spot it later:

- Save changes in IB to make sure the .xib file is up-to-date.

- Open the .xib file in a text editor such as BBEdit, or right-click the file in Xcode and select Open As -> Source Code to edit as text right in Xcode.

- Locate the new parent view by searching on the name you gave it. For example, in my sample project the view looks like this in the text file:

<customView ... id="5M5-9Q-zMt" userLabel="New Parent View"> ... </customView>

- Locate the old parent view. If you have trouble, you may want to give it a custom name as well before saving again in IB. In my trivial example, the old parent is the first and only top-level view in the xib file, so it looks like this:

<customView id="1"> ... </customView>

- Take note of the id for the old parent view and the new parent view. We’re going to need these in a minute to tie up some loose ends.

- Locate the constraints from the old parent view, cut them, and paste them into the new parent view’s XML content. Again in my case it’s trivial because I want all the constraints from the old parent view. I cut them out of the old and into the new so things look something like this:

<customView ... id="5M5-9Q-zMt" userLabel="New Parent View"> ... <constraints> <constraint firstItem="rfg-hN-1Il" firstAttribute="leading" secondItem="1" secondAttribute="leading" constant="20" symbolic="YES" id="LOu-nX-awU"/> <constraint firstItem="8Ju-hM-RbA" firstAttribute="baseline" secondItem="Sgd-MR-FMw" secondAttribute="baseline" id="Mwc-6y-uaP"/> ... </constraints> </customView>

- Locate the subviews themselves from the old parent view, and cut and paste them in the same way, making sure they reside in a <subviews> node in the new parent view. You should now have a new parent view whose XML topology looks something like this:

<customView ... id="5M5-9Q-zMt" userLabel="New Parent View"> <rect ... /> <autoresizingMask ... /> <subviews> ... your transplanted subviews here ... </subviews> <constraints> ... your transplanted constraints here ... </constraints> </customView>
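For anyone who would rather script the cut-and-paste than do it by hand, here is a minimal Python sketch of the same move using the standard library's `xml.etree.ElementTree`. The XML is a heavily trimmed, hypothetical stand-in for a real .xib (the `button` elements and the `transplant` helper are assumptions for illustration), and it mirrors the trivial case above where every subview and every constraint of the old parent moves to the new one.

```python
import xml.etree.ElementTree as ET

# Hypothetical, trimmed stand-in for a .xib: only the nodes that matter here.
XIB = """
<customView id="1">
  <subviews>
    <button id="rfg-hN-1Il"/>
    <button id="8Ju-hM-RbA"/>
    <customView id="5M5-9Q-zMt" userLabel="New Parent View"/>
  </subviews>
  <constraints>
    <constraint firstItem="rfg-hN-1Il" firstAttribute="leading" secondItem="1" secondAttribute="leading" constant="20" id="LOu-nX-awU"/>
  </constraints>
</customView>
"""

def transplant(old_parent, new_parent):
    """Move the <subviews> and <constraints> children of old_parent into new_parent."""
    for tag in ("subviews", "constraints"):
        source = old_parent.find(tag)
        if source is None:
            continue
        target = new_parent.find(tag)
        if target is None:
            # The new parent has no such node yet, so create it.
            target = ET.SubElement(new_parent, tag)
        for child in list(source):
            if child is new_parent:
                continue  # the new parent itself stays where it is
            source.remove(child)
            target.append(child)

root = ET.fromstring(XIB)  # in this sample the old parent is the root element
new_parent = root.find(".//*[@userLabel='New Parent View']")
transplant(root, new_parent)
print(ET.tostring(root, encoding="unicode"))
```

In a real file you would likely want to be more selective, for instance leaving behind any constraints that position the new parent view inside the old one.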

We’re close! But not quite finished. If you save and try to use the .xib now, you’ll find that Interface Builder rejects it as corrupted. What’s wrong? The constraints we transplanted mostly reference only the other views that we transplanted, but some of them also reference the old parent view. To fix the integrity of these constraints, we need to update them to reference the new parent view instead.
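A quick way to see which constraints are affected is to list every `firstItem` or `secondItem` that points outside the new parent view's own subtree. The following Python sketch does that on a trimmed, hypothetical sample; the `button` elements and the `stale_constraints` helper are assumptions for illustration, and the check encodes my working assumption that a constraint may only reference its containing view or that view's descendants.

```python
import xml.etree.ElementTree as ET

# Hypothetical sample: views and constraints already moved, references not yet fixed.
XIB = """
<customView id="1">
  <subviews>
    <customView id="5M5-9Q-zMt" userLabel="New Parent View">
      <subviews>
        <button id="rfg-hN-1Il"/>
        <button id="8Ju-hM-RbA"/>
        <button id="Sgd-MR-FMw"/>
      </subviews>
      <constraints>
        <constraint firstItem="rfg-hN-1Il" firstAttribute="leading" secondItem="1" secondAttribute="leading" constant="20" id="LOu-nX-awU"/>
        <constraint firstItem="8Ju-hM-RbA" firstAttribute="baseline" secondItem="Sgd-MR-FMw" secondAttribute="baseline" id="Mwc-6y-uaP"/>
      </constraints>
    </customView>
  </subviews>
</customView>
"""

def stale_constraints(view):
    """Return (constraint id, attribute, referenced id) for references leaving view's subtree."""
    # Ids of the view itself and everything nested inside it, ignoring constraint ids.
    known_ids = {el.get("id") for el in view.iter()
                 if el.tag != "constraint" and el.get("id")}
    stale = []
    for constraint in view.findall("constraints/constraint"):
        for attr in ("firstItem", "secondItem"):
            ref = constraint.get(attr)
            if ref is not None and ref not in known_ids:
                stale.append((constraint.get("id"), attr, ref))
    return stale

root = ET.fromstring(XIB)
new_parent = root.find(".//*[@id='5M5-9Q-zMt']")
for constraint_id, attr, ref in stale_constraints(new_parent):
    print(f"constraint {constraint_id}: {attr} still points at {ref}")
```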

- Refer back to the Interface Builder “id” values you noted earlier. We need to locate any reference to the old parent view and adjust it so it references the new parent view. In our example, the old parent view id is “1” and the new parent view id is “5M5-9Q-zMt”. Specifically, we’re looking for attributes on our transplanted constraints where the “firstItem” or “secondItem” references the old parent ID:

<constraint firstItem="rfg-hN-1Il" firstAttribute="leading" secondItem="1" secondAttribute="leading" constant="20" symbolic="YES" id="LOu-nX-awU"/>

Change the value secondItem="1" to secondItem="5M5-9Q-zMt", and repeat for any other instances where the old parent view is referenced.

- Save the text-formatted .xib file, cross your fingers, and hope you didn’t make any mistakes.

- Reopen the .xib file in Interface Builder, or if you’re already in Xcode’s text editor, right-click the file and select Open As -> Interface Builder.
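If there are many references to fix, the find-and-replace on `firstItem` and `secondItem` can be scripted as well. This is a minimal Python sketch under the same assumptions as the walkthrough: the ids are the ones from the sample project, the XML is a trimmed hypothetical stand-in for a real .xib, and `retarget` is an illustrative helper rather than anything Xcode provides. It only touches constraints that live directly in the new parent view, so references to id "1" elsewhere in the file are left alone.

```python
import xml.etree.ElementTree as ET

# Hypothetical sample: constraints already transplanted, still pointing at the old parent.
XIB = """
<customView id="1">
  <subviews>
    <customView id="5M5-9Q-zMt" userLabel="New Parent View">
      <subviews>
        <button id="rfg-hN-1Il"/>
      </subviews>
      <constraints>
        <constraint firstItem="rfg-hN-1Il" firstAttribute="leading" secondItem="1" secondAttribute="leading" constant="20" id="LOu-nX-awU"/>
      </constraints>
    </customView>
  </subviews>
</customView>
"""

def retarget(new_parent, old_id, new_id):
    """Point firstItem/secondItem references at new_id; return how many were changed."""
    changed = 0
    for constraint in new_parent.findall("constraints/constraint"):
        for attr in ("firstItem", "secondItem"):
            if constraint.get(attr) == old_id:
                constraint.set(attr, new_id)
                changed += 1
    return changed

root = ET.fromstring(XIB)
new_parent = root.find(".//*[@id='5M5-9Q-zMt']")
count = retarget(new_parent, old_id="1", new_id="5M5-9Q-zMt")
fixed = new_parent.find("constraints/constraint")
print(count, fixed.get("secondItem"))
```

ElementTree is not guaranteed to write a file back byte for byte as Xcode produced it, so if you adapt this to read and write a real .xib, keep the backup and diff the result before reopening it in Interface Builder.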

If your combination of luck and skill paid off as planned, then you’ll see something beautiful like this:

All of my views, now situated within the new parent view, and the desired constraints intact.

I hope this helps serve as a specific reference for folks in the same boat as I am in, wanting to shuffle views around without losing the hard work I’ve put into my constraints. And I hope it also serves to inspire you to think beyond the limitations of our tools. As great as Xcode, Interface Builder, and a host of other essential technologies are, they often fall short of desired behavior. When they do, it’s often in our power to work around the issues and carry on developing software as effectively as we know how.