In the nearly four years since Swift was announced at WWDC 2014, Mac and iOS developers have embraced the language with decreasing reluctance. As language features evolve, syntax stabilizes, and tooling improves, it’s easier than ever to leap into full-fledged Swift development.

Several months ago, I myself made this leap. Although the vast majority of my Mac source base consists of Objective-C files, I have enjoyed adding new source files in Swift, and even converting key files to Swift either as an exercise, or when I think I will gain specific advantages.

One remaining challenge in Swift is the lack of ABI stability. In layperson’s terms: the lack of ABI stability prevents compiled Swift code from one version of the Swift compiler and runtime from linking with and running in tandem with Swift code compiled for another version.

For most developers, this limitation simply means that the entire Swift standard library, along with glue libraries for linking to system frameworks, needs to be bundled with the application that is built with Swift. Although it’s a nuisance that several megabytes of libraries must be added to every single Swift app, in the big scheme of things, it’s not a big deal.

A worse consequence is the number of pitfalls that ABI instability presents, which are difficult to understand intuitively and, in many cases, impossible, or at least dangerous, to work around. These pitfalls lie mainly in areas where developer code is executed on behalf of a system service, in a system process. In this context, it is not possible for developers to ensure that the required version of Swift libraries will be available to support their code. Game over.

On the Mac, system integration plugins are a typical scenario for this problem. While iOS has evolved with a strong architecture for running developer code in standalone, sandboxed processes, on the Mac there are still many plugins that run in a shared system process alongside code from other developers. These plugins run the gamut from arcane, rarely used functionality, to very common, user-facing features where a plugin is effectively required in order to satisfy the platform behaviors prescribed by Apple and expected by end-users.

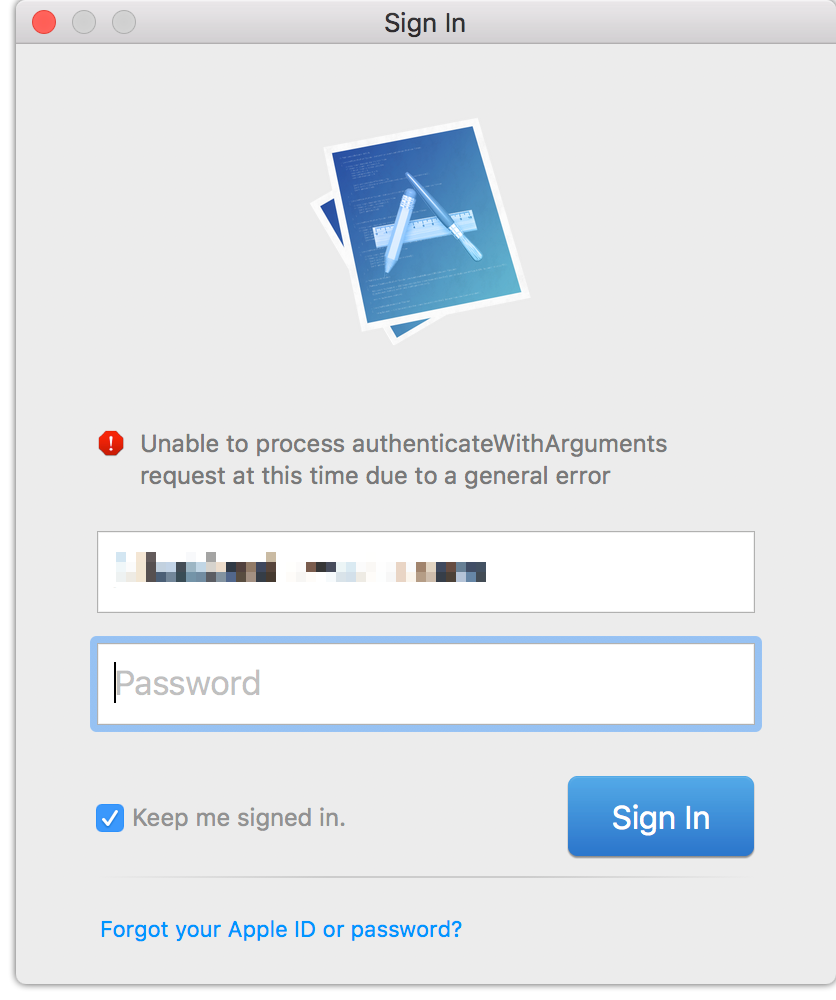

One example on the more arcane, or at least inessential, end of the spectrum is the Screen Saver plugin interface. Create a new project in Xcode, and choose the “Screen Saver Plugin” template as your starting point. Notice how, unlike most templates, Xcode doesn’t even offer a choice of language. Your source files will be Objective-C. At least they’re giving you a hint here.

On the more mainstream end of the spectrum are plugins such as System Preferences panels and QuickLook Plugins. Depending on the type of app you are developing, it may be essential, or at least very well-advised, to implement one of these types of plugins. So what do you do if you have an existing Objective-C app that you want to port to Swift, or you are writing a Swift app from scratch, and need to support one of these plugin formats? In the case of System Preferences panels at least, you have a couple of practical options:

- Implement the plugin code, and all supporting code in Objective-C.

- Move the functionality out of System Preferences and into the host app.

Each of these could be somewhat reasonable approaches for a System Preferences plugin. The content of these plugins is often fairly straightforward, standard UI, and the goal is usually to collect configuration data to convey to the host application. It’s also not unreasonable, and may even be preferable to move such configuration code out of System Preferences and into a native panel inside the host app.

QuickLook Plugins are another beast. Because the goal of a QuickLook Plugin is usually to convey a visual depiction of a native document type, it’s exceedingly common to take advantage of the very classes that present the document natively in the host app. Let’s say you’ve written an app in Swift, FancyGraphMaker. Apple encourages you to implement a QuickLook Plugin so that users will be able to preview the appearance of your fancy graphs, both in the dedicated QuickLook interface, and by way of more unique looking icons in the Finder.

But once you’ve written the code to draw those fancy graphs in Swift, you’re locked out of using that code from a QuickLook Plugin. Worse? Finishing touches such as supporting Quick Look are liable to come later in the development of an app, so you’ve probably gone through the decision-making process of writing your app in Swift, before realizing that the decision effectively cuts you off from a key system feature. That’s a Swift Integration Trap.

Although the workarounds are not as straightforward in this scenario as they are for a System Preferences pane, it is probably still technically possible to leverage Swift code in the implementation of a QuickLook Plugin. I have not tested this, but I imagine such a plugin could spawn an XPC process that is itself implemented in Swift and executes the bulk of the preview-generation work on behalf of the system-encumbered plugin code. The XPC process would be free to link to whatever bundled Swift libraries it requires, generate the desired preview data, and message it back to the host process. At least, I think that would work.
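To make that speculation slightly more concrete, here is a minimal, untested sketch of what the Swift side of such an XPC service might look like. Every name in it (`PreviewRendering`, `PreviewRenderer`, `ServiceDelegate`, `runPreviewService`) is hypothetical, and the renderer just hands back the file’s raw bytes as a stand-in for real drawing code. It assumes the Objective-C plugin can open an `NSXPCConnection` to a service bundled with it and that the service is allowed to read the file, neither of which is confirmed here.

```swift
import Foundation

// Hypothetical protocol shared by the Objective-C plugin and the Swift service.
@objc protocol PreviewRendering {
    func renderPreview(forFileAt url: URL, withReply reply: @escaping (Data?) -> Void)
}

// Swift implementation that would live inside the XPC service.
final class PreviewRenderer: NSObject, PreviewRendering {
    func renderPreview(forFileAt url: URL, withReply reply: @escaping (Data?) -> Void) {
        // Placeholder: a real service would draw the document and return
        // image or PDF data. Here the file's bytes are returned unchanged.
        reply(try? Data(contentsOf: url))
    }
}

// Vends a PreviewRenderer to each incoming connection.
final class ServiceDelegate: NSObject, NSXPCListenerDelegate {
    func listener(_ listener: NSXPCListener,
                  shouldAcceptNewConnection newConnection: NSXPCConnection) -> Bool {
        newConnection.exportedInterface = NSXPCInterface(with: PreviewRendering.self)
        newConnection.exportedObject = PreviewRenderer()
        newConnection.resume()
        return true
    }
}

// Would be called from the service's main.swift. For a service listener,
// resume() does not return, which also keeps the delegate alive.
func runPreviewService() {
    let delegate = ServiceDelegate()
    let listener = NSXPCListener.service()
    listener.delegate = delegate
    listener.resume()
}
```

On the plugin side, the Objective-C code would presumably declare the same protocol, set it as the connection’s `remoteObjectInterface`, and call `renderPreviewForFileAt:withReply:` on the remote proxy, so that no Swift code is loaded into the shared system process.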

But I shouldn’t have to think that hard to get this to work, nor should any other developer. The problem with these Swift Integration Traps is twofold:

- If you don’t know about them, you end up stuck, potentially regretting the decision to move to Swift.

- If you do know about them, you might put off adopting Swift completely, or at least put off converting classes that are pertinent to QuickLook preview generation.

Each of these consequences is bad for developers, for users, and for Apple. Developers face a trickier decision process about whether to move to Swift, users face potential integration shortcomings for Swift-based apps, and Apple suffers either reduced adoption of Swift, reduced integration with system services, or both.

I filed Radar #38792518 requesting that QuickLook Plugins be supported by the App Extension model. Essentially, this would formalize the process of putting the generation code in a separate XPC process, as I speculated above would work around the problem. The App Extension system is designed to support, and in fact requires, this approach. The faster Apple moves QuickLook Plugins and other shared-process plugins to the App Extension model, the faster developers can embrace Swift with full knowledge that their efforts to integrate with the system will not be stymied.

Update: Thanks to a hint from Chris Liscio, I have learned that Apple has in fact made some progress on the QuickLook front, but it won’t help the vast majority of cases in which a QuickLook Plugin is used to provide previews for custom file types. It took me a while to hunt this down because it is not very clearly documented, and Google searches do not lead to information about it.

At WWDC 2017, Apple announced support for a new QuickLook Preview Extension. It escaped my notice even while ardently searching for evidence of such a beast, because the news was shared in the What’s New in Core Spotlight session. Making matters worse, the term “QuickLook” does not appear once in the session transcript, although it turns out that “Quick Look” appears many times:

> Core Spotlight is also coming to macOS and just like on iOS you can customize your preview. On macOS a preview is shown when you select a search result in the Spotlight window. Here you really do want to implement a Quick Look preview extension for your Core Spotlight item because Spotlight on macOS does not have a default preview.

Ooh, this sounds exciting! I’ve wondered over the years why such similar plugins, Spotlight importers, and QuickLook generators, shouldn’t be unified. Although the WWDC presentation emphasizes substantial parity in behavior for QuickLook Previews between iOS and macOS, there is a major gotcha:

> Core Spotlight is great for databases and shoeboxes where your app has full control over the contents.
>
> It’s not for items that the user monitors in the finder, for that the classic Spotlight API still exists and still works great.

I beg to differ with that “still works great” assessment, at least in the context of this post. Mac developers who want to integrate with QuickLook must still use a shared-process plugin. It’s still a Swift Integration Trap.